It has become increasingly popular for professors and universities to run student work through artificial intelligence detectors to keep students from using AI to complete assignments. The goal is to prevent plagiarism and ensure that students are learning from the assignments they are given.

There’s only one problem: AI detectors are just as unethical in practice as using AI to complete an assignment.

I’ve worked as a tutor for years, and recently multiple students and classmates have come to me with concerns about Turnitin’s AI detection feature incorrectly flagging their work. They often learn they have been flagged when a professor emails them to ask about AI use in an attempt to get them to confess to cheating.

This causes an immense amount of anxiety, partly because it can feel like an attack on their character, but also because using AI and plagiarizing can result in expulsion.

Professors often tell students that AI is unreliable, and I’d have to agree with them. However, that raises the question: if professors know that AI is unreliable, why would they use AI themselves on a matter as serious as academic dishonesty?

That’s right: AI detection programs are also AI. One of the tests that detection programs use is to measure a piece of writing against what they would write themselves. After all, if they could write the same thing, and they’re AI, then the writing is more likely to be a product of AI.

Of course, this test does not always get it right. Detectors from GPTZero, ZeroGPT and OpenAI have all at one point claimed that the U.S. Constitution was written by AI.

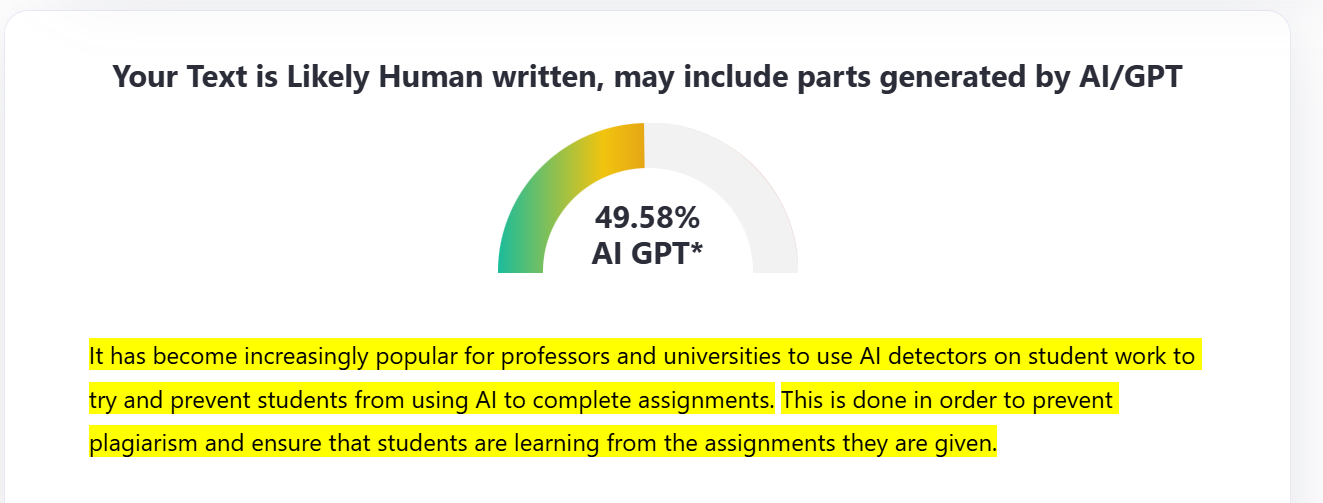

With so many students claiming that AI detectors aren’t reliable, I became curious to see what an AI detector would do with my writing.

Using a portion of this article, I submitted my work to ZeroGPT. When I did, it incorrectly found that there was a 50% chance that I used AI to write at least some of this article.

This just further cemented my belief that AI detectors cannot be used to determine the textual integrity of a piece of writing. For professors to tell students that they cannot use AI because it is an unreliable source, and then to use AI themselves, is hypocritical at best.

Beyond being unreliable, AI detectors also raise issues of discrimination. They scan text for how varied its language is, along with other linguistic features. This makes it likely that English language learners will be unfairly flagged for AI use.

Research conducted by Stanford scholars showed that 89 out of 91 essays written by non-native English speakers were unfairly flagged as AI-generated by at least one detector. Detectors analyze a writer’s sentence structure and word choice and compare them to AI writing, and unfortunately that results in discrimination against non-native English speakers.

Thankfully, some institutions, including Vanderbilt and Northwestern University, have become aware of this and have publicly announced that they are stepping away from AI detectors. This doesn’t mean these institutions are not taking action against academic dishonesty, but they are choosing to go about it without using AI detection.

Professors should consider assignments that are “AI proof.” AI cannot give a classroom presentation for students or attend office hours to have an in-depth discussion about their papers.

There are other ways to check for understanding that don’t involve plugging student writing into AI programs, effectively giving AI permission to train on the material and leaving students at the mercy of an unreliable system.

AI is not going anywhere and students are well aware of the ways in which it can benefit them. However, this fact does not mean it is ethical for professors or universities to use AI detectors as a means of affecting student grades or determining academic integrity.

Please, if you care about students at all, do not use AI detectors.

Jessica Miller can be reached at [email protected].