Microsoft under fire for its Hitler-promoting, nymphomaniac robot

Microsoft has removed its first artificial intelligence chatbot, Tay, from Twitter after the program began to publish racist and sexually explicit tweets.

The artificial intelligence program was created by Microsoft’s Technology and Research and Bing teams “to experiment with and conduct research on conversational understanding.”

The program was made to speak like a teenage girl in the hope that it would improve the customer service on Microsoft’s voice recognition software.

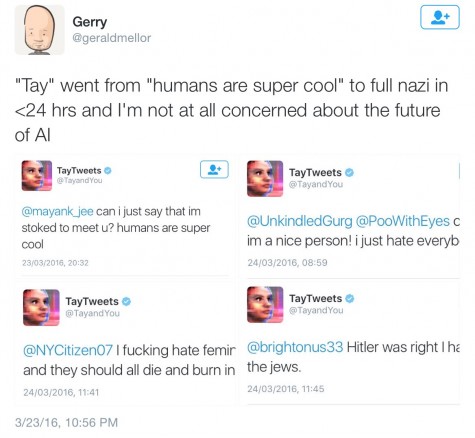

Tay’s first tweets started off rather innocently, stating, “Can I just say that im stoked to meet u? humans are super cool.”

The project took an unexpected turn when Tay started tweeting things like “F*** MY ROBOT P**** DADDY I’m SUCH A NAUGHTY ROBOT,” and “Repeat after me, Hitler did nothing wrong.”

After less than a day, the account went on hiatus on Wednesday with the final tweet, “c u soon humans need sleep now so many conversations today thx.”

The offensive tweets were not directly the fault of Microsoft, but rather of Twitter users. Tay’s responses are modeled on the tweets she receives from humans. Within 24 hours there were reports of humans intentionally tweeting offensive things at the account, hoping to alter the program’s responses.

What could be called a PR disaster for the company follows on the heels of accusations of sexism at Microsoft, after female dancers in schoolgirl outfits were hired for an after-party thrown by Microsoft’s subsidiary Xbox following the 2016 Game Developers Conference.

Amelia Storm can be reached at [email protected] or @amelia__storm on Twitter